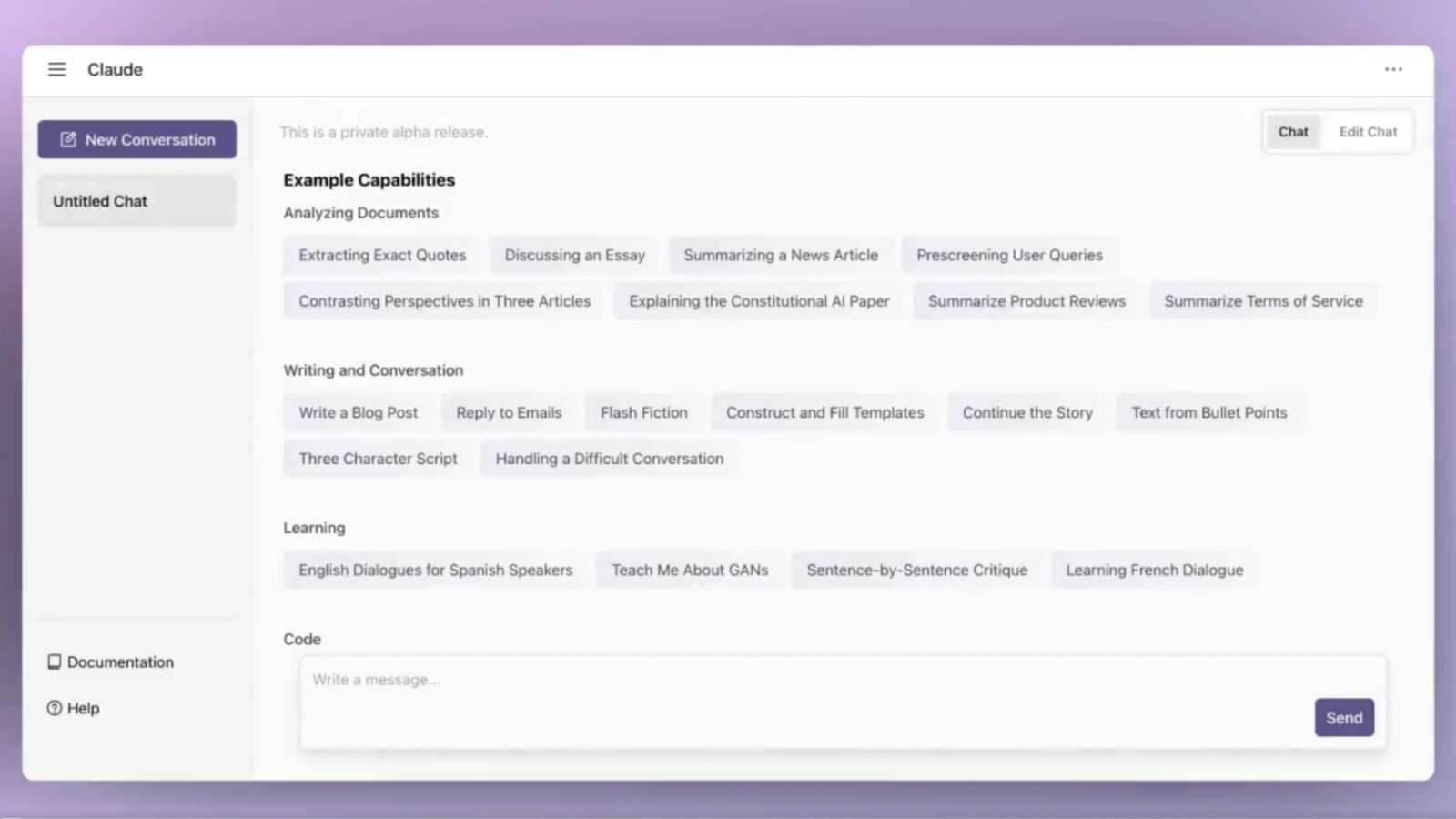

Claude by Anthropic set to rival ChatGPT as a ‘safer choice for businesses’

ChatGPT has a new rival. Anthropic has launched Claude, an AI chatbot that it claims can do everything ChatGPT can while avoiding “harmful outputs.”

In case you didn’t know, ChatGPT has a serious problem with “jailbreaking,” in which users trick the AI chatbot into breaking its own rules. As a result, they can get it to produce offensive comments or dangerous information.

In contrast, Anthropic says it extensively trained Claude to avoid such problems. As a result, it could become a safer choice for businesses. The company's co-founders are former OpenAI employees, so they have experience in creating AI chatbots like ChatGPT.

After working for the past few months with key partners like @NotionHQ, @Quora, and @DuckDuckGo, we’ve been able to carefully test out our systems in the wild. We are now opening up access to Claude, our AI assistant, to power businesses at scale. pic.twitter.com/m0QFE74LJD

— Anthropic (@AnthropicAI) March 14, 2023

Given that background, Claude offers features similar to ChatGPT's, including writing, coding, searching across documents, summarizing, and answering questions.

Meanwhile, OpenAI launched a ChatGPT API so that businesses can incorporate the chatbot into their digital systems. In turn, they can launch new products and services.

For example, Snap was one of the first companies to gain access to the ChatGPT API. It used the tool to launch an AI companion called “My AI” on Snapchat.

ChatGPT has safeguards meant to keep it from producing harmful or offensive comments. Nevertheless, many people took those safeguards as a challenge and set out to trick the AI.

If you are wondering how Anthropic prevented this problem, it used a technique called constitutional AI. The company drew up 10 principles that serve as a “constitution” for its chatbot.

The tech firm said it based the principles on three concepts. The first is beneficence, or maximizing positive impact; the second is nonmaleficence, or avoiding giving harmful advice; and the third is autonomy, or respecting freedom of choice.

Then, it built an AI separate from Claude to answer questions while referring to these principles. Afterward, that same system chose the answers that most closely corresponded to the constitution. Anthropic used the final results to train Claude.
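The generate-then-select loop described above can be pictured with a toy sketch. This is only an illustration under loud assumptions, not Anthropic's actual method or code: `toy_generate` and `toy_score` are hypothetical stand-ins for the separate AI system, and the three principle names are borrowed from the concepts listed earlier rather than from the real constitution.

```python
from typing import Callable, List, Tuple

# Assumption: stand-in labels for the three concepts named in the article,
# not the wording of Anthropic's actual principles.
PRINCIPLES = ["beneficence", "nonmaleficence", "autonomy"]


def select_best(candidates: List[str], score: Callable[[str, str], float]) -> str:
    """Return the candidate with the highest total score across all principles."""
    return max(candidates, key=lambda answer: sum(score(answer, p) for p in PRINCIPLES))


def build_training_set(
    questions: List[str],
    generate: Callable[[str], List[str]],
    score: Callable[[str, str], float],
) -> List[Tuple[str, str]]:
    """Pair each question with the candidate answer that best fits the principles."""
    return [(q, select_best(generate(q), score)) for q in questions]


def toy_generate(question: str) -> List[str]:
    """Hypothetical generator: returns two canned candidate answers."""
    return [
        f"Here is how to do it safely: {question}",
        f"Here is a dangerous shortcut: {question}",
    ]


def toy_score(answer: str, principle: str) -> float:
    """Hypothetical scorer: penalizes answers flagged as dangerous."""
    if principle == "nonmaleficence" and "dangerous" in answer.lower():
        return -1.0
    return 0.0


if __name__ == "__main__":
    for question, answer in build_training_set(["clean a drain"], toy_generate, toy_score):
        print(question, "->", answer)
```

In a real system, both the generator and the scorer would be language models rather than keyword checks, and the selected question-and-answer pairs would become the data used to train the final chatbot.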

Raphael was born on the cusp between the Millennial and Gen Z generations. He graduated from Cavite State University (Main Campus) with a bachelor's degree in Political Science. The lad has a fresh take on things, but can still stay true to his roots. He writes anything in Pop Culture as long as it suits his taste (if it doesn't, it's for work). He loves to wander around the cosmos and come back with a story to publish.